Confusion Matrix Calculator (simple to use)

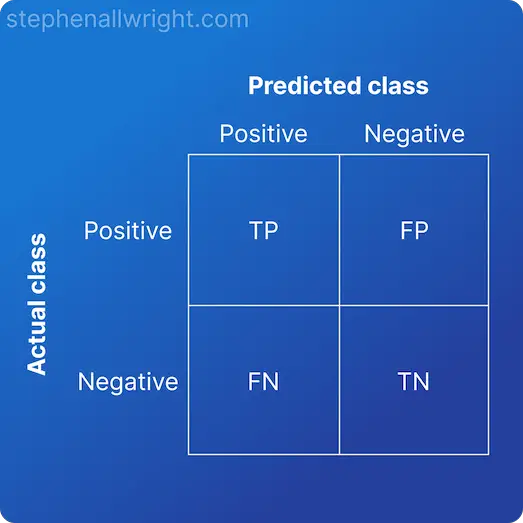

The confusion matrix is a way of measuring the performance of classification machine learning models by counting their True Positive, False Positive, True Negative, and False Negative predictions. These four values can be used to calculate a set of metrics that describe different aspects of model performance.

The metrics calculated in this calculator are F1 score, accuracy, balanced accuracy, precision, recall, and sensitivity (in binary classification, sensitivity is another name for recall).
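As a rough illustration of how these metrics follow from the four values, here is a minimal Python sketch using the standard textbook formulas. It is not the calculator's own code: the function name `confusion_metrics`, the helper `safe_div`, and the choice to return 0.0 when a denominator is zero are all assumptions made for this example.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Compute common binary classification metrics from the four counts."""

    def safe_div(numerator, denominator):
        # Assumption: report 0.0 instead of raising when a denominator is zero.
        return numerator / denominator if denominator else 0.0

    precision = safe_div(tp, tp + fp)    # share of predicted positives that are correct
    recall = safe_div(tp, tp + fn)       # share of actual positives that are found
    specificity = safe_div(tn, tn + fp)  # share of actual negatives that are found

    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "balanced_accuracy": (recall + specificity) / 2,
        "precision": precision,
        "recall": recall,
        "sensitivity": recall,  # same quantity as recall, under another name
        "f1": safe_div(2 * tp, 2 * tp + fp + fn),
    }


if __name__ == "__main__":
    for name, value in confusion_metrics(tp=40, fp=10, tn=40, fn=10).items():
        print(f"{name}: {value:.3f}")
```

Balanced accuracy is the average of recall and specificity, so it stays informative when one class is much larger than the other, whereas plain accuracy can look high simply because the majority class dominates.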

This calculator can compute these metrics either from the four confusion matrix values directly or from lists of predictions and their corresponding actual values.
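To show how lists of predictions and actual values reduce to the four confusion matrix values, here is a minimal Python sketch for the binary case. The function name `confusion_counts` and the `positive` parameter (the label treated as the positive class) are hypothetical names chosen for this example, not part of the calculator.

```python
def confusion_counts(actual, predicted, positive=1):
    """Count (TP, FP, TN, FN) from paired actual and predicted labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")

    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive:
            if a == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if a == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, fp, tn, fn


if __name__ == "__main__":
    actual = [1, 0, 1, 1, 0, 0, 1, 0]
    predicted = [1, 0, 0, 1, 0, 1, 1, 0]
    print(confusion_counts(actual, predicted))  # prints (3, 1, 3, 1)
```

Any label other than `positive` is treated as negative here, which is an assumption that only suits two-class problems; multi-class data would need one set of counts per class.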

Related articles

Metric comparisons

AUC vs accuracy

Accuracy vs balanced accuracy

F1 score vs AUC

F1 score vs accuracy

Micro vs Macro F1 score

Metric calculators

F1 score calculator

Accuracy calculator

Confusion matrix maker

Precision recall calculator