What is a good F1 score and how do I interpret it?

F1 score is a commonly used metric for classification machine learning models, but its definition is not widely understood, which can make it difficult to know what a good score actually is. In this post I explain what F1 is, how to implement it, and what a good score is.

What is F1 score?

F1 score (also known as F-measure, or balanced F-score) is a performance metric that evaluates a classification model by calculating the harmonic mean of its precision and recall for the positive class, which is often the minority class.

It is a popular metric for classification models because it stays informative on both balanced and imbalanced datasets, and because it takes into account both the precision and the recall of the model.

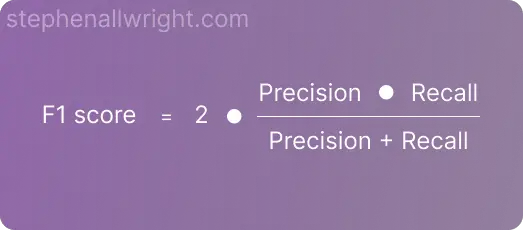

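The formula for F1 score is as follows:

$$F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$

where precision = TP / (TP + FP), recall = TP / (TP + FN), and TP, FP and FN are the numbers of true positives, false positives and false negatives for the positive class.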
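To make the formula concrete, here is a minimal from-scratch sketch in plain Python. The function name `f1_from_labels` is hypothetical, and treating an undefined 0/0 precision or recall as 0 is an assumed convention (scikit-learn exposes a similar choice through the `zero_division` parameter of `f1_score`).

```python
def f1_from_labels(y_true, y_pred, positive=1):
    """Binary F1 for the label `positive`, computed from scratch."""
    pairs = list(zip(y_true, y_pred))
    # Confusion-matrix counts for the positive class
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Precision: share of predicted positives that are actually positive.
    # Recall: share of actual positives that the model found.
    # A 0/0 ratio is treated as 0.0 here (an assumed convention).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0

    if precision + recall == 0:
        return 0.0
    # Harmonic mean of precision and recall
    return 2 * precision * recall / (precision + recall)


y_true = [0, 1, 0, 0, 1, 1]
y_pred = [0, 0, 1, 0, 0, 1]
print(f1_from_labels(y_true, y_pred))
```

With these labels there is 1 true positive, 1 false positive and 2 false negatives, so precision is 1/2, recall is 1/3 and F1 is 0.4 (up to floating-point rounding). These are the same labels as the scikit-learn example in this post, so the two results should agree.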

What does F1 score tell you?

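F1 score is the harmonic mean of precision and recall, which means it tells you the model’s balanced ability to both capture positive cases (recall) and be accurate with the cases it does capture (precision). Because the harmonic mean is pulled towards the smaller of the two values, a model only gets a high F1 score when both precision and recall are high.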
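The effect of using a harmonic rather than an arithmetic mean can be seen with a short sketch. The precision and recall values below are made up for illustration: a model whose positive predictions are always right but which finds only 10% of the actual positives.

```python
def arithmetic_mean(precision, recall):
    return (precision + recall) / 2


def harmonic_mean(precision, recall):
    # This is the F1 formula: 2 * P * R / (P + R)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical model: every positive prediction is correct,
# but it only finds 10% of the actual positives.
precision, recall = 1.0, 0.1
print(arithmetic_mean(precision, recall))
print(harmonic_mean(precision, recall))
```

The arithmetic mean is 0.55, which looks respectable, while the harmonic mean (the F1 score) is only about 0.18, much closer to the weak recall.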

The benefit of F1’s approach is that it takes into account the model’s ability on two attributes, which makes it a robust gauge of model performance. This is in stark contrast to simply measuring the proportion of correct predictions, as another popular metric, accuracy, does.

Is F1 score good for imbalanced datasets?

F1 score still reflects true model performance when the dataset is imbalanced, which is one of the reasons it is such a common metric. It can do this because it is calculated from the precision and recall of the positive class, which in imbalanced problems is usually the minority class, rather than from all predictions.

In highly skewed datasets, a poorly performing model that only ever predicts the majority class can still look good on other metrics, such as accuracy. F1 score exposes this: such a model has a recall of zero on the positive class (and its precision is undefined, typically treated as zero), so its F1 score is zero.
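A minimal sketch of that situation, using a made-up dataset of 95 negatives and 5 positives and a "model" that always predicts the majority class. The F1 function uses 2TP / (2TP + FP + FN), which is algebraically equivalent to the harmonic mean of precision and recall; returning 0.0 when there are no positives at all is an assumed convention.

```python
def accuracy(y_true, y_pred):
    # Fraction of all predictions that are correct
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1(y_true, y_pred, positive=1):
    # Equivalent form of F1: 2TP / (2TP + FP + FN)
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Hypothetical skewed dataset: 95 negatives, 5 positives
y_true = [0] * 95 + [1] * 5
# A "model" that always predicts the majority class
y_pred = [0] * 100

print(accuracy(y_true, y_pred))
print(f1(y_true, y_pred))
```

Accuracy comes out at 0.95, while F1 is 0.0 because the model never produces a single true positive.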

How do I calculate F1 score in Python?

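F1 is simple to compute in Python with the scikit-learn package. Here is a simple example:

```python
from sklearn.metrics import f1_score

# True labels and model predictions for a binary problem (1 = positive class)
y_true = [0, 1, 0, 0, 1, 1]
y_pred = [0, 0, 1, 0, 0, 1]

# By default f1_score computes binary F1 for the positive label 1
f1 = f1_score(y_true, y_pred)
print(f1)  # about 0.4: precision 1/2, recall 1/3
```

What is a good F1 score?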

F1 score ranges from 0 to 1, where 0 is the worst possible score and 1 is a perfect score indicating that the model predicts each observation correctly.

A good F1 score depends on the data you are working with and the use case. For example, a model predicting the occurrence of a disease would be held to very different expectations than a customer churn model. However, there is a general rule of thumb for F1 scores:

| F1 score | Interpretation |
|---|---|
| > 0.9 | Very good |
| 0.8 - 0.9 | Good |
| 0.5 - 0.8 | OK |
| < 0.5 | Not good |
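If you want to apply this rule of thumb programmatically, for example when logging model results, a small helper is enough. `interpret_f1` is a hypothetical name, and assigning the shared endpoints of the ranges (0.8 and 0.5) to the higher band is an assumption. The bands remain a rough guide, since what counts as good still depends on your data and use case.

```python
def interpret_f1(score):
    """Map an F1 score to the rule-of-thumb bands in the table."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("F1 score must be between 0 and 1")
    if score > 0.9:
        return "Very good"
    # Boundary values (0.8, 0.5) go to the higher band: an assumption,
    # since the table's ranges share their endpoints.
    if score >= 0.8:
        return "Good"
    if score >= 0.5:
        return "OK"
    return "Not good"


for s in (0.95, 0.85, 0.6, 0.4):
    print(s, interpret_f1(s))
```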

Is F1 score a good metric to use?

F1 score is a go-to metric for measuring the performance of classification models. This is mainly due to its ability to reflect true performance on both balanced and imbalanced datasets, and also because it takes into account both the precision and recall of the model, making it a well-rounded assessor of model performance.
