What is a good MSE value? (simply explained)

Mean Squared Error (MSE) is a machine learning metric for regression models, but it can be confusing to know what a good value is. In this post, I will explain what MSE is, how to calculate it, and what a good value actually is.

Mean Squared Error (MSE) is a machine learning metric for regression models, but it can be confusing to know what a good value is. In this post, I will explain what MSE is, how to calculate it, and what a good value actually is.

What is MSE?

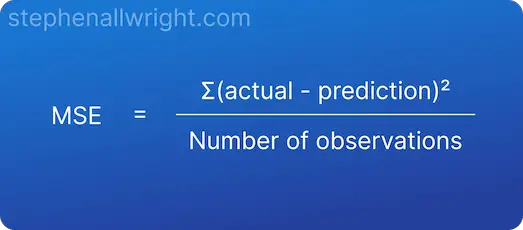

Mean Squared Error (MSE) is the average squared error between actual and predicted values.

Squared error, also known as L2 loss, is a row-level error calculation where the difference between the prediction and the actual is squared. MSE is the aggregated mean of these errors, which helps us understand the model performance over the whole dataset.

The main draw for using MSE is that it squares the error, which results in large errors being punished or clearly highlighted. It’s therefore useful when working on models where occasional large errors must be minimised.

The formula for calculating MSE is:

Example of calculating MSE

Let’s look at an example of calculating MSE for a regression model which predicts house prices:

| Actual | Prediction | Squared Error |

|---|---|---|

| 100,000 | 90,000 | 100,000,000 |

| 200,000 | 210,000 | 100,000,000 |

| 150,000 | 155,000 | 25,000,000 |

| 180,000 | 178,000 | 4,000,000 |

| 120,000 | 121,000 | 1,000,000 |

MSE = (100,000,000 + 100,000,000 + 25,000,000 + 4,000,000 + 1,000,000) / 5 = 46,000,000

You can see that the error is not returned on the same scale as the target, therefore making it difficult to interpret its meaning.

When should you use MSE?

MSE is a popular metric to use for evaluating regression models, but there are also some disadvantages you should be aware of when deciding whether to use it or not:

Advantages of using MSE

- Easy to calculate in Python

- Simple to understand calculation for end users

- Designed to punish large errors

Disadvantages of using MSE

- Error value not given in terms of the target

- Difficult to interpret

- Not comparable across use cases

Calculate MSE in Python with sklearn

MSE is an incredibly simple metric to calculate. If you are using Python it is easily implemented by using the scikit-learn package. An example can be seen here:

from sklearn.metrics import mean_squared_error

y_true = [10, -5, 4, 15]

y_pred = [8, -1, 5, 13]

mse = mean_squared_error(y_true, y_pred)

What is a good MSE value?

The closer your MSE value is to 0, the more accurate your model is. However, there is no 'good' value for MSE. It is an absolute value which is unique to each dataset and can only be used to say whether the model has become more or less accurate than a previous run.

Can MSE be used to compare models?

MSE cannot be used to compare different models from different datasets as it’s an absolute value that is only relevant to that given dataset. If you need to compare models across different datasets then it would be best to use percentage metrics such as MAPE.

What is a normal MSE?

There is no MSE value which is considered ‘normal’ as it’s an absolute error score which is unique to that model and dataset. For example, a house price prediction model will have much larger MSE values than a model which predicts height, as they are predicting for very different scales.

Is lower MSE better?

The lower the MSE value there more accurate the model is. Lower is of course a relative term, so it’s important to know that MSE values can only be compared to other MSE values calculated for that same dataset, as MSE is an absolute metric unique to each use case.

Can MSE be greater than 1?

MSE is a metric which ranges from 0 to infinity, and can therefore be greater than 1.

Related articles

Metric calculators

Regression metrics

RMSE

MAE score

R-Squared

MAPE score

MDAPE

Metric comparisons

RMSE vs MSE, which should I use?

MSE vs MAE, which is the better regression metric?