What is a good AUC score? (simply explained)

AUC score (also known as ROC AUC score) is a classification machine learning metric, but it can be confusing to know what a good score is. In this post, I explain what AUC score is, how to calculate it, and what a good score actually is.


What is AUC score?

AUC stands for Area Under the Curve. In classification it almost always refers to the area under the Receiver Operating Characteristic (ROC) curve, which is why it is also called ROC AUC. It's a metric used to assess the performance of classification machine learning models.

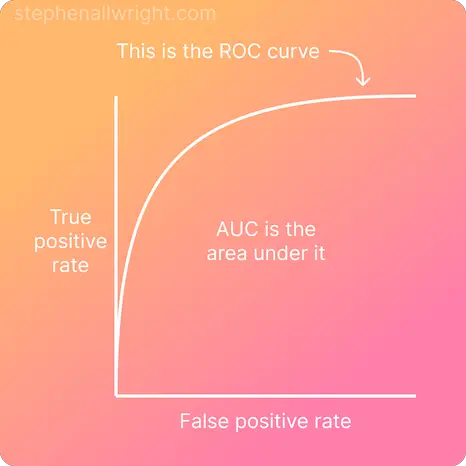

The ROC curve is a graph that plots the true positive rate (TPR) against the false positive rate (FPR) as the classification threshold is varied, showing the TPR you can expect for a given FPR. The AUC score is the area under this curve. It summarises, across all thresholds, how well the model separates the two classes: it equals the probability that a randomly chosen positive observation receives a higher score than a randomly chosen negative one.
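To make the curve and the area concrete, here is a minimal sketch using scikit-learn's `roc_curve` and `auc` on a four-observation toy example (the labels and scores are illustrative values, not output from a real model). The hypothetical helper `pairwise_auc` checks the ranking interpretation by counting, over every positive/negative pair, how often the positive observation gets the higher score (ties count as half).

```python
from itertools import product

from sklearn.metrics import auc, roc_auc_score, roc_curve

# Toy data: true labels and predicted scores for the positive class
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

# Points of the ROC curve: one (FPR, TPR) pair per threshold
fpr, tpr, thresholds = roc_curve(y_true, y_score)
for f, t in zip(fpr, tpr):
    print(f"FPR={f:.2f}  TPR={t:.2f}")

# Area under those points (trapezoidal rule)
area = auc(fpr, tpr)


def pairwise_auc(labels, scores):
    """Hypothetical helper: fraction of positive/negative pairs ranked correctly."""
    pos = [s for t, s in zip(labels, scores) if t == 1]
    neg = [s for t, s in zip(labels, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p, q in product(pos, neg))
    return wins / (len(pos) * len(neg))


print(area)                            # 0.75
print(pairwise_auc(y_true, y_score))   # 0.75
print(roc_auc_score(y_true, y_score))  # 0.75
```

All three numbers agree: three of the four positive/negative pairs are ranked correctly, so the area under the curve is 0.75.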

What are the positives and negatives of AUC score?

AUC score is a very common metric to use when developing classification models; however, there are some aspects to keep in mind when using it:

Advantages of using AUC score

- A single overall performance metric for classification models that is simple to calculate

- Summarises the trade-off between sensitivity (TPR) and specificity (1 - FPR) across all thresholds in a single number

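- Unaffected by the ratio of positives to negatives, because TPR and FPR are each computed within a single class, so it can be used on imbalanced datasets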
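A quick way to see why the class ratio does not change the score: in this sketch (toy values, not from a real model) every negative observation is repeated ten times, which changes the class balance from 1:1 to 10:1 but not how the two classes are ranked, and `roc_auc_score` returns the same value.

```python
from sklearn.metrics import roc_auc_score

# Balanced toy data
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

# Repeat every negative observation 10 times: the class ratio changes,
# but the distribution of scores within each class stays the same
neg_scores = [s for t, s in zip(y_true, y_score) if t == 0]
pos_scores = [s for t, s in zip(y_true, y_score) if t == 1]
y_true_imb = [0] * (10 * len(neg_scores)) + [1] * len(pos_scores)
y_score_imb = neg_scores * 10 + pos_scores

print(roc_auc_score(y_true, y_score))          # 0.75
print(roc_auc_score(y_true_imb, y_score_imb))  # 0.75
```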

Disadvantages of using AUC score

- Not very intuitive for end users to understand

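- Difficult to interpret in practice, because it does not tell you how the model performs at the specific threshold you end up using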

How do I calculate AUC score in Python using scikit-learn?

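AUC score is a simple metric to calculate in Python with the help of the scikit-learn package. Below is a simple example for binary classification. Note that roc_auc_score should be given the true labels and the predicted probability (or score) of the positive class, rather than predicted class labels:

```python
from sklearn.metrics import roc_auc_score

# True class labels (0 = negative, 1 = positive)
y_true = [0, 1, 1, 0, 0, 1]

# Predicted probabilities of the positive class, e.g. model.predict_proba(X)[:, 1]
y_score = [0.1, 0.4, 0.8, 0.6, 0.2, 0.9]

auc = roc_auc_score(y_true, y_score)
print(auc)  # about 0.89: 8 of the 9 positive/negative pairs are ranked correctly
```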
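In practice, the scores usually come from a fitted model. The sketch below assumes a logistic regression trained on scikit-learn's bundled breast cancer dataset (chosen only for illustration) and passes the positive-class column of `predict_proba` to `roc_auc_score`. The function also accepts hard 0/1 predictions from `predict`, but then the ROC curve has only a single threshold, so the probabilities normally give a more informative score.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Binary classification dataset bundled with scikit-learn (no download needed)
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Scaling the features helps logistic regression converge
model = make_pipeline(StandardScaler(), LogisticRegression())
model.fit(X_train, y_train)

# Probability of the positive class (column 1 of predict_proba)
proba = model.predict_proba(X_test)[:, 1]
auc_proba = roc_auc_score(y_test, proba)

# Hard 0/1 predictions: the ROC curve collapses to a single threshold
labels = model.predict(X_test)
auc_labels = roc_auc_score(y_test, labels)

print(f"AUC from probabilities: {auc_proba:.3f}")
print(f"AUC from hard labels:   {auc_labels:.3f}")
```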

What is a good AUC score?

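The AUC score ranges from 0 to 1, where 1 is a perfect score and 0.5 means the model is no better than random guessing. A score below 0.5 means the model ranks the classes the wrong way round, so inverting its predictions would give a score above 0.5.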
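The two reference points on this scale can be checked directly. In this sketch (randomly generated and toy data, purely illustrative), scores that carry no information about the labels land close to 0.5, and negating a model's scores reverses its ranking, turning an AUC of 0.75 into 1 - 0.75 = 0.25.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

rng = np.random.default_rng(0)

# Random labels and random scores with no relationship between them
y_true = rng.integers(0, 2, size=10_000)
random_scores = rng.random(10_000)
print(roc_auc_score(y_true, random_scores))  # close to 0.5

# Negating the scores reverses the ranking, so the AUC becomes 1 - AUC
y_toy = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc_score(y_toy, scores)
auc_flipped = roc_auc_score(y_toy, [-s for s in scores])
print(auc, auc_flipped)  # 0.75 0.25
```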

As with all metrics, what counts as a good score depends on the use case and the dataset. Medical use cases, for example, require a much higher score than e-commerce. However, as a rule of thumb:

| AUC score | Interpretation |
|---|---|
| >0.8 | Very good performance |
| 0.7-0.8 | Good performance |
| 0.5-0.7 | OK performance |
| 0.5 | As good as random choice |

Is a higher AUC score better?

Yes. The higher the AUC score, the better the model is at ranking positive observations above negative ones, with 1 being the best possible score.

What is the maximum possible AUC value?

The maximum possible AUC value is 1. This is a perfect score: it means the model ranks every positive observation above every negative one, so there is a threshold at which every observation is assigned to the correct class.
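Because AUC depends only on how the scores rank the observations, a score of 1 does not require probabilities close to 0 and 1. This toy sketch (illustrative values) shows a model with an AUC of 1.0 whose scores are all below 0.5: at a threshold of 0.5 it predicts every observation as negative, yet a threshold placed between the two groups (0.25 here, an arbitrary choice) classifies everything correctly. It also shows that a strictly increasing transform of the scores, such as a logarithm, leaves the AUC unchanged.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

y_true = np.array([0, 0, 1, 1])
# Every positive scores above every negative, although no score reaches 0.5
y_score = np.array([0.1, 0.2, 0.3, 0.4])

print(roc_auc_score(y_true, y_score))  # 1.0

# At a threshold of 0.5 every observation is predicted negative...
print((y_score >= 0.5).astype(int))    # [0 0 0 0]
# ...but a threshold between the two groups classifies all of them correctly
print((y_score >= 0.25).astype(int))   # [0 0 1 1]

# A strictly increasing transform keeps the ranking, so the AUC is unchanged
print(roc_auc_score(y_true, np.log(y_score)))  # 1.0
```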

How can I improve my AUC score?

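To improve your AUC score, there are two main things that you could do:

- Add more features to your dataset that carry some signal about the target

- Tweak your model by adjusting its parameters or changing the type of model used

Changing the probability threshold at which the classes are chosen will not change the AUC score, because AUC already summarises performance across all thresholds. Tuning the threshold is still useful for threshold-dependent metrics such as accuracy or F1 score.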
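To see the first suggestion in action, here is a sketch on synthetic data (an assumption made for illustration: each of two independent features is the label plus Gaussian noise). The hypothetical helper `held_out_auc` fits a logistic regression on the chosen feature columns and reports the AUC on a held-out half of the data; adding the second informative feature should raise the score noticeably.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
n = 5_000

# Synthetic data: two independent noisy features, each carrying some signal about y
y = rng.integers(0, 2, size=n)
x1 = y + rng.normal(0, 1, size=n)
x2 = y + rng.normal(0, 1, size=n)
X = np.column_stack([x1, x2])

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=0)


def held_out_auc(columns):
    """Hypothetical helper: fit on the chosen columns, return AUC on the test half."""
    model = LogisticRegression().fit(X_train[:, columns], y_train)
    return roc_auc_score(y_test, model.predict_proba(X_test[:, columns])[:, 1])


auc_one = held_out_auc([0])      # only x1
auc_both = held_out_auc([0, 1])  # x1 plus the extra informative feature x2

print(f"AUC with one feature:  {auc_one:.3f}")
print(f"AUC with two features: {auc_both:.3f}")
```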

