Metrics for imbalanced data (simply explained)

Imbalanced data is a common occurrence when working with classification machine learning models. In this post, I explain which metrics are best to use when it's present in your dataset.

What is imbalanced data?

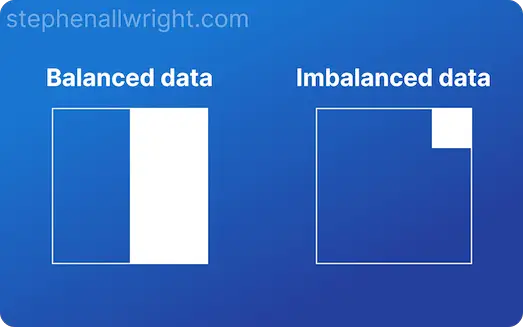

Imbalanced data refers to a situation, primarily in classification machine learning, where one target class accounts for a much larger proportion of observations than the others. Imbalanced data frequently occurs in real-world problems, so it’s a scenario that data scientists often have to deal with.

Why is imbalanced data a problem for evaluation metrics?

Imbalanced data can cause issues in understanding the performance of a model. When evaluating performance on imbalanced data, models that only predict well for the majority class will seem to be highly performant when looking at simple metrics such as accuracy, whilst in actuality the model is performing poorly.

This means that metric choice becomes even more important in these situations.

Example of imbalanced data classification metrics

Let’s look at an example of imbalanced data and how it can lead to misleading metrics.

We have the following dataset:

| Actual | Prediction | Correct |
|---|---|---|
| 1 | 0 | N |
| 0 | 0 | Y |
| 0 | 0 | Y |
| 0 | 0 | Y |
| 0 | 0 | Y |
| 0 | 0 | Y |
| 0 | 0 | Y |
| 0 | 0 | Y |

We can see that there is just one occurrence of the positive class, so this would be classed as an imbalanced dataset.

This single observation has been incorrectly classified because the model only ever predicts the majority negative class. This is clearly not a well-performing model, so we should expect our metrics to reflect this bad performance.

Let’s calculate some common metrics for this dataset:

- Accuracy = 87.5%

- Balanced accuracy = 50%

- F1 score = 0

Here we can see that by only predicting for the majority class, it seems like the model is performing incredibly well when we look at Accuracy. On the other hand, Balanced Accuracy and F1 Score tell us that the model is performing poorly, which is what we know to be true.
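The three figures can be reproduced by hand from the table. The snippet below is a minimal sketch in plain Python rather than a definitive implementation: the helper `safe_div` is a hypothetical name introduced here, and returning 0 when a ratio is undefined (for example precision when the model never predicts the positive class) is an assumed convention.

```python
# Labels from the example table: one positive (1) and seven negatives (0).
y_true = [1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0]  # the model always predicts the majority class


def safe_div(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is 0 (assumed convention)."""
    return numerator / denominator if denominator else 0.0


# Confusion-matrix counts, treating 1 as the positive class.
pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)
tn = sum(1 for t, p in pairs if t == 0 and p == 0)
fp = sum(1 for t, p in pairs if t == 0 and p == 1)
fn = sum(1 for t, p in pairs if t == 1 and p == 0)

# Accuracy: share of all observations predicted correctly.
accuracy = (tp + tn) / len(y_true)

# Balanced accuracy: mean of the recall on each class.
recall_pos = safe_div(tp, tp + fn)
recall_neg = safe_div(tn, tn + fp)
balanced_accuracy = (recall_pos + recall_neg) / 2

# F1 score for the positive class: harmonic mean of precision and recall.
precision = safe_div(tp, tp + fp)
f1 = safe_div(2 * precision * recall_pos, precision + recall_pos)

print(f"Accuracy: {accuracy:.1%}")
print(f"Balanced accuracy: {balanced_accuracy:.1%}")
print(f"F1 score: {f1:.2f}")
```

Accuracy is dominated by the seven correct negatives, whereas balanced accuracy and F1 both depend on recall for the positive class, which is zero here.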

This demonstrates the importance of choosing the right metrics to truly understand performance.

Metrics for imbalanced data

We’ve seen so far the importance of choosing the correct metric for imbalanced datasets, as it’s possible to be misled during model evaluation. Given this, the most common metrics to use for imbalanced datasets are:

- Macro F1 score

- AUC score (AUC ROC)

- Average precision score (AP)

- G-Mean

The common factor for all of these metrics is that they take into account the model performance for each class, instead of looking at a single summary across all observations.
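To make the per-class idea concrete, here is a rough sketch of Macro F1 and G-Mean computed on the example table from earlier in the post. It assumes G-Mean is taken as the geometric mean of the per-class recalls, and the helper names `safe_div` and `per_class_scores` are hypothetical. AUC and average precision are left out because they need predicted scores or probabilities, which the example table does not provide.

```python
import math

# Labels from the example table: one positive (1) and seven negatives (0).
y_true = [1, 0, 0, 0, 0, 0, 0, 0]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0]


def safe_div(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is 0 (assumed convention)."""
    return numerator / denominator if denominator else 0.0


def per_class_scores(y_true, y_pred, label):
    """Return (recall, f1) for `label`, treating it as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return recall, f1


labels = sorted(set(y_true))
scores = {label: per_class_scores(y_true, y_pred, label) for label in labels}

# Macro F1: unweighted mean of the per-class F1 scores.
macro_f1 = sum(f1 for _, f1 in scores.values()) / len(labels)

# G-Mean: geometric mean of the per-class recalls.
g_mean = math.prod(recall for recall, _ in scores.values()) ** (1 / len(labels))

for label, (recall, f1) in scores.items():
    print(f"class {label}: recall={recall:.3f}, f1={f1:.3f}")
print(f"Macro F1: {macro_f1:.3f}")
print(f"G-Mean: {g_mean:.3f}")
```

Because each class contributes equally to both scores, the complete failure on the single positive observation pulls Macro F1 down sharply and drives G-Mean to zero, even though the negative class is predicted perfectly.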

Why is accuracy not good for imbalanced datasets?

Accuracy is not a good metric for imbalanced datasets. Say we have an imbalanced dataset and a badly performing model which always predicts for the majority class. This model would receive a very good accuracy score as it predicted correctly for the majority of observations, but this hides the true performance of the model which is objectively not good as it only predicts for one class.
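As a small illustration of this effect, the sketch below computes the accuracy of a model that always predicts the most common class, for a few made-up class splits. The function name `majority_baseline_accuracy` and the split sizes are assumptions chosen purely for illustration.

```python
from collections import Counter


def majority_baseline_accuracy(y_true):
    """Accuracy of a model that always predicts the most common class in y_true."""
    counts = Counter(y_true)
    return max(counts.values()) / len(y_true)


# Hypothetical datasets of 1,000 observations with a shrinking positive class.
for n_positive in (500, 100, 10, 1):
    y_true = [1] * n_positive + [0] * (1000 - n_positive)
    accuracy = majority_baseline_accuracy(y_true)
    print(f"{n_positive:>3} positives out of 1000 -> baseline accuracy {accuracy:.1%}")
```

The baseline accuracy is simply the majority class's share of the data, so it rises as the imbalance grows even though the model never identifies a single minority observation.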

Which is the best metric for imbalanced data?

In general, it is good practice to track multiple metrics when developing a machine learning model, as each highlights different aspects of model performance. However, if I had to choose a single north star metric, I would recommend Macro F1 score: it is a good all-around metric that balances precision and recall whilst also performing well on imbalanced datasets.
