L1 vs L2 loss functions, which is best to use?

L1 and L2 are loss functions used in regression machine learning models. They are often discussed in the same context so it can be difficult to know which to choose for a given project. In this post I will explain what they are, the differences and similarities, and hopefully help you choose one for your project.

L1 vs L2 loss functions, what are they?

L1 and L2 are both loss functions. A loss function is applied to each observation in a dataset to measure how far a prediction is from the actual value. The resulting loss values can then be aggregated to the dataset level, where they give an indication of model accuracy over the whole dataset. This aggregation is called the cost function.
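The loss-versus-cost distinction can be sketched with NumPy. The values below are made up for illustration, and taking the mean is an assumption: it is one common way to aggregate losses into a cost, but a sum is also used.

```python
import numpy as np

# Illustrative actual values and predictions (hypothetical data).
actual = np.array([10, 20, 30])
predicted = np.array([12, 20, 25])

# Loss: one value per observation.
l1 = np.abs(actual - predicted)   # absolute error per observation
l2 = (actual - predicted) ** 2    # squared error per observation

# Cost: aggregate the per-observation losses into one dataset-level number.
# Using the mean here is an assumption; summing is another common choice.
l1_cost = l1.mean()  # mean absolute error (MAE)
l2_cost = l2.mean()  # mean squared error (MSE)

print(l1, l2)
print(l1_cost, l2_cost)
```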

But what are L1 and L2?

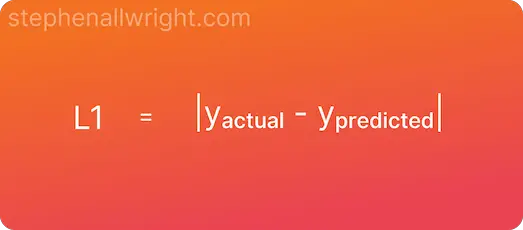

L1, also known as the Absolute Error Loss, is the absolute difference between the prediction and the actual value.

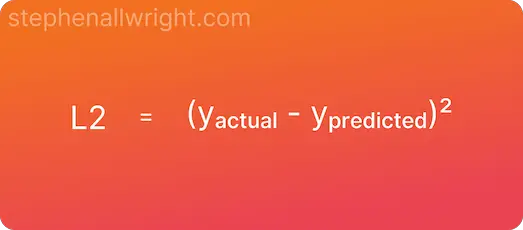

L2, also known as the Squared Error Loss, is the squared difference between the prediction and the actual value.

Mathematical formulas for L1 and L2 loss

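The difference between the two functions is clear from their formulas. Writing $y$ for the actual value and $\hat{y}$ for the prediction:

The L1 loss function formula is:

$$L_1(y, \hat{y}) = |y - \hat{y}|$$

The L2 loss function formula is:

$$L_2(y, \hat{y}) = (y - \hat{y})^2$$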

The key difference here is that L2 is squaring the difference, whilst L1 is simply the absolute difference.
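To make the effect of squaring concrete, here is a minimal pure-Python sketch of the two formulas for a single observation. The function names `l1_loss` and `l2_loss` are illustrative and not taken from any library.

```python
def l1_loss(actual: float, predicted: float) -> float:
    """Absolute error for one observation: |y - y_hat|."""
    return abs(actual - predicted)


def l2_loss(actual: float, predicted: float) -> float:
    """Squared error for one observation: (y - y_hat) ** 2."""
    return (actual - predicted) ** 2


# Doubling the error doubles the L1 loss but quadruples the L2 loss.
actual = 100_000
for error in [1_000, 2_000, 4_000]:
    predicted = actual + error
    print(error, l1_loss(actual, predicted), l2_loss(actual, predicted))
```

Because L1 grows linearly with the error and L2 grows quadratically, large errors weigh far more heavily under L2.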

Example calculation of L1 and L2 loss

Let’s put these formulas into practice and have a look at an example. We will calculate the L1 and L2 loss for a model which is seeking to predict house prices.

| Predicted price | Actual price | L1 loss | L2 loss |
|---|---|---|---|
| 100,000 | 105,000 | 5,000 | 25,000,000 |
| 120,000 | 118,000 | 2,000 | 4,000,000 |
| 220,000 | 170,000 | 50,000 | 2,500,000,000 |

The difference between the two losses is most evident for the outlier in the dataset (the third row). Under L1, its loss is 10 times that of the first observation; under L2, it is 100 times larger. Squaring amplifies large errors far more than small ones, and this is the key differentiator between the two loss functions.
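One way to quantify this is to look at how much of the total loss each observation contributes. The sketch below reuses the three house prices from the table; expressing the result as a share of the total is just one illustrative way to compare the two losses.

```python
import numpy as np

# House prices from the example table (int64 avoids overflow when squaring).
predicted = np.array([100_000, 120_000, 220_000], dtype=np.int64)
actual = np.array([105_000, 118_000, 170_000], dtype=np.int64)

l1 = np.abs(predicted - actual)   # absolute error per house
l2 = (predicted - actual) ** 2    # squared error per house

# Fraction of the total loss contributed by each house.
l1_share = l1 / l1.sum()
l2_share = l2 / l2.sum()

print(l1_share.round(3))
print(l2_share.round(3))
```

The outlier accounts for roughly 88% of the total L1 loss but about 99% of the total L2 loss.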

Implementing L1 and L2 loss in Python

Both functions are simple to implement in Python using the NumPy package. Here is a minimal example:

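```python
import numpy as np

actual = np.array([10, 11, 12, 13])
prediction = np.array([10, 12, 14, 11])

# L1: absolute difference for each observation
l1_loss = np.abs(actual - prediction)
print(l1_loss)  # [0 1 2 2]

# L2: squared difference for each observation
l2_loss = (actual - prediction) ** 2
print(l2_loss)  # [0 1 4 4]
```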

Similarities of L1 and L2 loss

Part of the confusion about which loss function to use comes from the similarities between them. These are:

- Both are relatively simple calculations for the difference between the prediction and actual

- Both depend on the scale of the model target (L1 is in the same units as the target, L2 in squared units), which makes it difficult to compare loss values across different datasets

- Both are easily implemented in Python
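To see how both losses depend on the units of the target, the sketch below computes the average L1 and L2 loss for the example house prices twice: once in dollars and once in thousands of dollars. The helper names `mean_l1` and `mean_l2` are hypothetical, introduced only for this illustration.

```python
import numpy as np

# House prices from the example table, in dollars.
actual = np.array([105_000, 118_000, 170_000], dtype=float)
predicted = np.array([100_000, 120_000, 220_000], dtype=float)


def mean_l1(a, p):
    """Average absolute error (L1 loss averaged over the dataset)."""
    return np.mean(np.abs(a - p))


def mean_l2(a, p):
    """Average squared error (L2 loss averaged over the dataset)."""
    return np.mean((a - p) ** 2)


# The same prices expressed in thousands of dollars.
scale = 1_000
actual_k, predicted_k = actual / scale, predicted / scale

print(mean_l1(actual, predicted), mean_l1(actual_k, predicted_k))
print(mean_l2(actual, predicted), mean_l2(actual_k, predicted_k))
```

Changing the units divides the L1 value by 1,000 (about 19,000 becomes 19) but the L2 value by 1,000,000 (about 843,000,000 becomes 843), so neither number is meaningful without knowing the scale of the target.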

Differences of L1 and L2 loss

There is one key difference between L1 and L2:

- They handle outliers differently. L2 is much more sensitive to outliers because the differences are squared, whilst L1 is the absolute difference and is therefore not as sensitive

L1 vs L2 loss, which is better?

We’ve learned about how to calculate L1 and L2, and what the similarities and differences are, but which is actually better to use?

The choice between L1 and L2 comes down to how much you want to punish outliers (large errors) in your predictions. If minimising large errors is important for your model, L2 is the better choice because squaring makes those errors dominate the loss. If occasional large errors are not an issue, L1 may be the better choice.
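A small experiment illustrates this trade-off. It uses a well-known result: the single constant prediction that minimises total L2 loss is the mean of the data, while the one that minimises total L1 loss is the median. The prices below are hypothetical, with one deliberate outlier, and the brute-force grid search is only for illustration.

```python
import numpy as np

# Hypothetical house prices with one large outlier (illustrative values only).
prices = np.array([100_000, 105_000, 110_000, 115_000, 500_000], dtype=np.int64)

# Candidate constant predictions to try, in steps of 1,000.
candidates = np.arange(90_000, 510_000, 1_000, dtype=np.int64)

# Total loss when predicting each candidate value for every house.
diffs = prices[None, :] - candidates[:, None]  # shape: (candidates, houses)
l1_totals = np.abs(diffs).sum(axis=1)
l2_totals = (diffs ** 2).sum(axis=1)

best_l1 = candidates[np.argmin(l1_totals)]
best_l2 = candidates[np.argmin(l2_totals)]

print(best_l1, np.median(prices))  # L1 optimum sits at the median
print(best_l2, np.mean(prices))    # L2 optimum sits at the mean
```

The single outlier drags the L2-optimal prediction far above most of the houses, while the L1-optimal prediction stays near the typical price.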
