Fit Predict #2: Fluff your SQL and don't be imbalanced

In this issue of the Fit Predict newsletter, we look at fixing imbalanced datasets, running causal inference, the reality of working as a Data Scientist, and more.

This is a light-hearted overview of what's been going on in the world of Data Science this week. See it as your 5-minute update such that you can sound at least slightly knowledgeable at your next coffee chat ☕

Have you been forwarded this? You can subscribe here!

Hey there,

If you're anything like me, you'll just about be getting back into the swing of things after the Christmas holidays. Coming back to work is always a bit of a shock to the system, that's for sure. Going from solving sudokus by a log fire, to putting out fires in production models can be rather jarring. 🥴

On that fire I put out in production, let me share a completely new and unique learning from that experience /s . 👨🏫

It's not a smart idea to drastically increase your dataset size and make significant changes to your training pipeline the day before you sign off for 2 weeks. If you do, you are likely to find yourself debugging in your parent's kitchen at 11 pm on their dial-up speed wifi. 🙃

You're welcome.

So, now that the model is running smoothly in production, let's get on with the newsletter!

🧰 Tools

The tools that will make your life that little bit easier, or at least more interesting... but either way it's fun to play with new toys.

Imbalanced data is a common problem that needs to be contended with when developing machine learning models. This package provides various out-of-the-box techniques to deal with it, and it's compatible with scitkit-learn which is handy.

This package from PyMC Labs is for running causal inference using Bayesian models. It's still in beta, but it would be fun to test out.

I've been using a linter in Python for a long time now, but I've never considered using one for my SQL scripts, until recently. I was introduced to this linter, which I'm happy to say is making my queries look smoother than ever.

🧑🔬 In practice

Stories of those who are genuinely implementing Data Science. Step aside Titanic dataset, this is the real deal.

This year's Wrapped from Spotify included a "Listening Personality", which it turns out has a very interesting set of calculations behind it. One can only dream of having this kind of data available to work with!

Managing customer service at Airbnb's scale must be a real challenge. To tackle it, they're employing some interesting machine learning techniques and products.

A common trap that we fall into is to try and improve metrics by adding features, but it can be just as effective to remove features instead. This story from Facebook is a great example of that.

🐦 The best of Data Twitter

Data Twitter is the best Twitter.

from @mattturck

Unless the two things are actually causally related, in which case it does

But even when that's the case, it might not be"

Causal inference, everyone!

from @PhDemetri

💭 Thought-provoking

Content to inspire, or at the very least keep you informed.

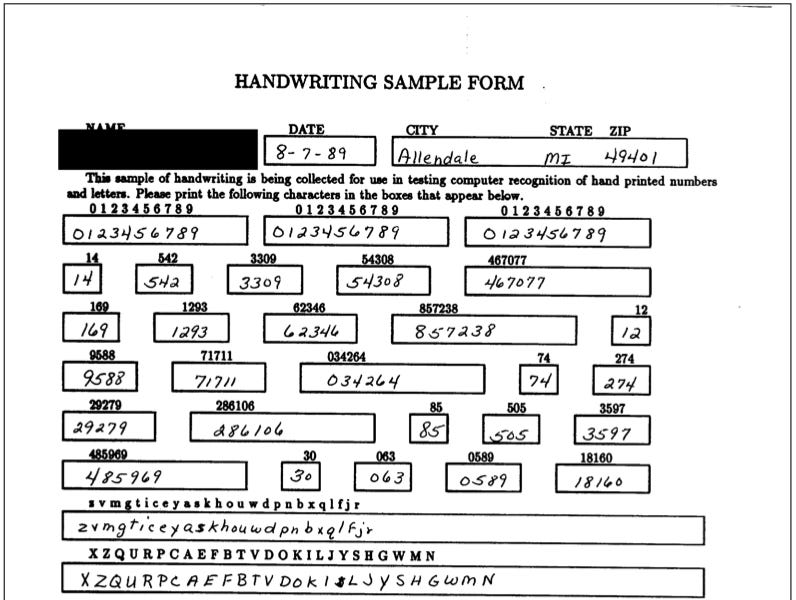

Chris Albon's new newsletter, Short Notes on AI, was launched last week and the first issue covers the famous MNIST dataset. It's a fascinating history lesson on one of the cornerstone datasets in our industry.

These two blog posts, the latter inspired by the former, are brutally honest portrayals of how being a Data Scientist can look in reality. I must say, that this is personally not my experience, but I learned about some red flags to look out for at least!

🔧 Updates

Did you know that your favourite Python packages actually get updated regularly and you should update your requirements.txt file?

The new version of Meta's self-supervised learning model, data2vec, is out and promises major significant performance improvements.