RMSE vs MSE, what's the difference?

RMSE and MSE are both metrics for measuring the performance of regression machine learning models, but what’s the difference? In this post, I will explain what these metrics are, their differences, and help you decide which is best for your project.

RMSE and MSE are both metrics for measuring the performance of regression machine learning models, but what’s the difference? In this post, I will explain what these metrics are, their differences, and help you decide which is best for your project.

RMSE vs MSE, what are they?

Root Mean Squared Error (RMSE) and Mean Squared Error (MSE) are both regression metrics, and they are closely related: RMSE is simply the square root of MSE. Let's look at their definitions in more detail.

What is MSE?

MSE (Mean Squared Error) is the average squared error between actual and predicted values.

Squared error, also known as L2 loss, is a row-level error calculation where the difference between the prediction and the actual value is squared. MSE is the mean of these row-level errors, which summarises model performance over the whole dataset.
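
As a minimal sketch of the two steps just described, the plain-Python functions below first compute the row-level squared errors and then take their mean. The function names `squared_errors` and `mse` are illustrative choices, not part of any library, and the sample values are the same ones used in the scikit-learn example later in this post.

```python
def squared_errors(y_true, y_pred):
    """Row-level squared error (L2 loss) for each actual/prediction pair."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return [(t - p) ** 2 for t, p in zip(y_true, y_pred)]


def mse(y_true, y_pred):
    """Mean squared error: the average of the row-level squared errors."""
    errors = squared_errors(y_true, y_pred)
    if not errors:
        raise ValueError("need at least one observation")
    return sum(errors) / len(errors)


y_true = [10, -5, 4, 15]
y_pred = [8, -1, 5, 13]
print(squared_errors(y_true, y_pred))  # [4, 16, 1, 4]
print(mse(y_true, y_pred))             # 6.25
```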

The main draw of MSE is that squaring the errors makes large errors contribute disproportionately to the total, so they are heavily penalised and clearly highlighted. It is therefore useful for models where occasional large errors must be minimised.
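
To make the effect of squaring concrete, here is a small sketch using hypothetical residuals (actual minus predicted): three small errors and one large one. It compares how much of the total the large residual accounts for when errors are measured in absolute terms versus when they are squared, as in MSE.

```python
# Hypothetical residuals (actual minus predicted): three small errors and one large one.
residuals = [1, -1, 2, 10]

squared = [r ** 2 for r in residuals]   # [1, 1, 4, 100]
mse = sum(squared) / len(squared)       # 106 / 4 = 26.5

# Fraction of the total error contributed by the large residual,
# measured on absolute errors versus squared errors.
abs_total = sum(abs(r) for r in residuals)   # 14
sq_total = sum(squared)                      # 106
share_abs = abs(residuals[-1]) / abs_total   # 10 / 14
share_sq = squared[-1] / sq_total            # 100 / 106

print(mse)                   # 26.5
print(round(share_abs, 3))   # 0.714
print(round(share_sq, 3))    # 0.943
```

The single large residual accounts for roughly 71% of the total absolute error, but roughly 94% of the total squared error, which is why MSE highlights it so strongly.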

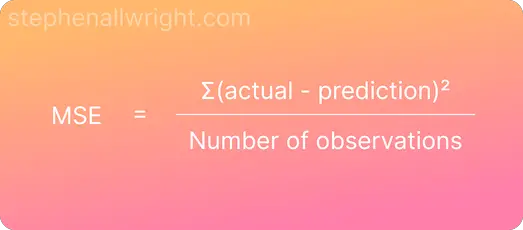

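The formula for calculating MSE is:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

where $n$ is the number of observations, and $y_i$ and $\hat{y}_i$ are the actual and predicted values for row $i$.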

What is RMSE?

Root Mean Squared Error (RMSE) is the square root of the mean squared error (MSE) between the predicted and actual values.

A benefit of using RMSE is that the resulting metric is in the same units as the target being predicted. For example, in a house price prediction model, RMSE gives the error in terms of house price, which helps end users easily understand model performance.
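
A minimal plain-Python sketch of RMSE follows: it computes the MSE and then takes its square root with `math.sqrt`, so the result comes back in the target's units. The `rmse` function name and the two house prices are hypothetical, chosen only for illustration.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error: the square root of the mean squared error."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)


# Hypothetical house prices (actual vs predicted), in currency units.
actual = [250_000, 310_000]
predicted = [240_000, 320_000]
print(rmse(actual, predicted))  # 10000.0, i.e. a typical error of about 10,000 in price units
```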

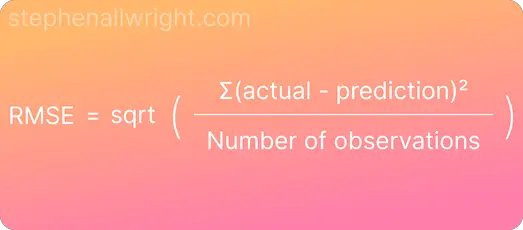

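The formula for calculating RMSE is:

$$\mathrm{RMSE} = \sqrt{\mathrm{MSE}} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

where $y_i$ and $\hat{y}_i$ are the actual and predicted values for each of the $n$ rows.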

Using RMSE and MSE in Python with NumPy and scikit-learn

Implementing RMSE and MSE in Python is simple with the scikit-learn package. Older versions of scikit-learn have no dedicated RMSE function, so the example below takes the square root of the MSE with NumPy; scikit-learn 1.4 and later also provide `root_mean_squared_error` in `sklearn.metrics`.

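```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Actual and predicted values for four observations
y_true = [10, -5, 4, 15]
y_pred = [8, -1, 5, 13]

# MSE: mean of the squared differences between actual and predicted values
mse = mean_squared_error(y_true, y_pred)  # 6.25

# RMSE: square root of the MSE, in the same units as the target
rmse = np.sqrt(mse)  # 2.5
```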

What is the difference between RMSE and MSE?

Whilst they are based on the same calculation, there are some key differences that you should be aware of when comparing RMSE and MSE. These are:

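- RMSE returns the error in the same units as the target it is predicting, whereas MSE returns it in squared units and is thus much more difficult to interpret

- MSE is more sensitive to outliers in absolute terms, as it is the mean of the squared differences, so a single large error inflates its value dramatically. RMSE takes the square root of this same quantity, bringing the number back to the target's scale, so large errors move it less in absolute terms than they move MSE. Because the square root is applied after averaging, both metrics still weight individual errors in the same way and rank models identically.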
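
The sketch below compares two hypothetical models on the same hypothetical targets: model A makes four small errors, while model B is perfect except for one outlier. It is only an illustration of the two points above, using hand-written `mse` and `rmse` helpers rather than any library function.

```python
import math


def mse(y_true, y_pred):
    """Mean of the squared differences between actual and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Square root of the MSE, in the same units as the target."""
    return math.sqrt(mse(y_true, y_pred))


# Hypothetical targets and two hypothetical models' predictions.
y_true = [10, 20, 30, 40]
model_a = [11, 19, 31, 39]   # four small errors of size 1
model_b = [10, 20, 30, 50]   # one large error of size 10 (an outlier)

for name, y_pred in [("A", model_a), ("B", model_b)]:
    print(name, mse(y_true, y_pred), rmse(y_true, y_pred))
# A 1.0 1.0
# B 25.0 5.0
```

The outlier pushes MSE up by 24 (squared units) but RMSE up by only 4 (target units), while both metrics agree that model A is better.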

Example of calculating RMSE and MSE

Let’s look at an example of using RMSE and MSE for a regression model which seeks to predict house prices.

| Actual | Prediction | Squared Error |
|---|---|---|
| 100,000 | 90,000 | 100,000,000 |
| 200,000 | 210,000 | 100,000,000 |
| 150,000 | 155,000 | 25,000,000 |
| 180,000 | 178,000 | 4,000,000 |
| 120,000 | 121,000 | 1,000,000 |

MSE = (100,000,000 + 100,000,000 + 25,000,000 + 4,000,000 + 1,000,000) / 5 = 46,000,000

RMSE = sqrt[(100,000,000 + 100,000,000 + 25,000,000 + 4,000,000 + 1,000,000) / 5] ≈ 6,782

Here you can see that RMSE is given in terms of the target we are predicting (house price), whilst MSE is in squared price units and is consequently much more difficult to interpret.
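
The arithmetic in this example can be reproduced with a few lines of plain Python, using the values from the house-price table above and `math.sqrt` for the final step.

```python
import math

# Values from the house-price table above.
actual = [100_000, 200_000, 150_000, 180_000, 120_000]
prediction = [90_000, 210_000, 155_000, 178_000, 121_000]

# Row-level squared errors, then their mean (MSE) and its square root (RMSE).
squared_errors = [(a - p) ** 2 for a, p in zip(actual, prediction)]
mse = sum(squared_errors) / len(squared_errors)
rmse = math.sqrt(mse)

print(squared_errors)  # [100000000, 100000000, 25000000, 4000000, 1000000]
print(mse)             # 46000000.0
print(round(rmse))     # 6782
```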

When to use RMSE vs MSE?

The key differences between RMSE and MSE are their interpretability and their behaviour on outliers. Given this, RMSE should be used when you need to communicate your results to end users in an understandable way, or when you do not need large errors to inflate the headline number as dramatically as they do in MSE.

Why do we use RMSE instead of MSE?

RMSE is one of the most common metrics for regression models and is often preferred over MSE. The main reason is that it is far easier to interpret: because the error is expressed in the target's units, it is much easier to understand how well the model performs.

Is RMSE better than MSE?

Which metric is best depends on your use case and priorities. However, RMSE is often the go-to metric for regression models, primarily because both the model's creator and its end users can interpret it, as the error is given in terms of the target.
