Loss function vs cost function: what’s the difference?

Loss function and cost function are two terms that are used in similar contexts within machine learning, which can lead to confusion as to what the difference is. In this post I will explain what they are, their similarities, and their differences.

What are loss and cost functions in machine learning?

Loss and cost functions are methods of measuring the error in machine learning predictions. Loss functions measure the error per observation, whilst cost functions measure the error over all observations.

What is a loss function?

A loss function is a function that measures the error between a single prediction and the corresponding actual value. Commonly used loss functions include L1 loss and L2 loss.
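As a minimal Python sketch, each loss function takes one actual value and one prediction and returns a single error value. The names `l1_loss` and `l2_loss` are our own illustrative choices, not a library API, and L2 loss is assumed here to be the squared difference, its usual definition.

```python
def l1_loss(actual: float, prediction: float) -> float:
    """L1 loss: absolute difference for a single observation."""
    return abs(actual - prediction)


def l2_loss(actual: float, prediction: float) -> float:
    """L2 loss (assumed squared difference) for a single observation."""
    return (actual - prediction) ** 2


# One house: actual price 100,000, predicted price 90,000
print(l1_loss(100_000, 90_000))  # 10000
print(l2_loss(100_000, 90_000))  # 100000000
```

Both functions work on one observation at a time, which is exactly what makes them loss functions rather than cost functions.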

Loss function example

To illustrate how to use a loss function, I will calculate the L1 loss on a set of house price predictions. The L1 loss is simply the absolute difference between the prediction and the actual value:

| Actual | Prediction | L1 loss |
|---|---|---|
| 100,000 | 90,000 | 10,000 |
| 200,000 | 210,000 | 10,000 |
| 150,000 | 155,000 | 5,000 |
| 180,000 | 178,000 | 2,000 |
| 120,000 | 121,000 | 1,000 |

Here you can see that we calculate the L1 loss (the absolute difference) separately for each observation.
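The table can be reproduced with a short Python sketch that applies the L1 loss to each (actual, prediction) pair. The list names are illustrative; the values are the house prices from the table above.

```python
actuals = [100_000, 200_000, 150_000, 180_000, 120_000]
predictions = [90_000, 210_000, 155_000, 178_000, 121_000]

# L1 loss is computed independently for each observation,
# so the result has one value per house
l1_losses = [abs(a - p) for a, p in zip(actuals, predictions)]
print(l1_losses)  # [10000, 10000, 5000, 2000, 1000]
```

Note that the output is a list with one entry per observation, not a single number.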

What is a cost function?

A cost function is a function that measures the error between predictions and their actual values across the whole dataset. To do this, it aggregates the loss values that are calculated per observation. Commonly used cost functions include mean absolute error (MAE) and mean squared error (MSE).
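A minimal Python sketch of the two cost functions: each one first computes a per-observation loss (L1 for MAE, squared difference for MSE) and then averages those losses into a single number. The function names `mae` and `mse` are our own, and plain lists are assumed as input rather than any particular library's arrays.

```python
def mae(actuals: list[float], predictions: list[float]) -> float:
    """Mean absolute error: the mean of the per-observation L1 losses."""
    if len(actuals) != len(predictions) or not actuals:
        raise ValueError("need two non-empty lists of equal length")
    l1_losses = [abs(a - p) for a, p in zip(actuals, predictions)]
    return sum(l1_losses) / len(l1_losses)


def mse(actuals: list[float], predictions: list[float]) -> float:
    """Mean squared error: the mean of the per-observation squared losses."""
    if len(actuals) != len(predictions) or not actuals:
        raise ValueError("need two non-empty lists of equal length")
    l2_losses = [(a - p) ** 2 for a, p in zip(actuals, predictions)]
    return sum(l2_losses) / len(l2_losses)


# House prices from the loss function example
actuals = [100_000, 200_000, 150_000, 180_000, 120_000]
predictions = [90_000, 210_000, 155_000, 178_000, 121_000]
print(mae(actuals, predictions))  # 5600.0
print(mse(actuals, predictions))  # 46000000.0
```

Unlike the loss functions, each of these returns one value for the whole dataset.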

Cost function example

To illustrate how to implement a cost function, we’ll reuse the set of predictions from the loss function example:

| Actual | Prediction | L1 loss |
|---|---|---|
| 100,000 | 90,000 | 10,000 |
| 200,000 | 210,000 | 10,000 |
| 150,000 | 155,000 | 5,000 |
| 180,000 | 178,000 | 2,000 |
| 120,000 | 121,000 | 1,000 |

Let’s calculate the MAE cost on these predictions. MAE is simply the mean of all the L1 losses we calculated in the previous example, so the MAE cost is:

MAE cost = (10,000 + 10,000 + 5,000 + 2,000 + 1,000)/5 = 5,600
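The same calculation can be written as a general formula. In this notation (our own choice), $y_i$ is the actual value, $\hat{y}_i$ the prediction for observation $i$, and $n$ the number of observations; the inner term is the L1 loss and the outer mean turns it into the cost:

$$
\text{MAE} = \frac{1}{n}\sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert
= \frac{10{,}000 + 10{,}000 + 5{,}000 + 2{,}000 + 1{,}000}{5}
= \frac{28{,}000}{5} = 5{,}600
$$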

Are cost function and loss function the same?

A cost function is not the same as a loss function. A loss function calculates the error per observation, whilst a cost function calculates the error over the whole dataset. Data scientists often use the two terms interchangeably, but strictly speaking they refer to different things.

What is the difference between cost function and loss function?

Both the cost and the loss function calculate prediction error, but the key difference is the level at which they calculate this error. A loss function calculates the error per observation, whilst the cost function calculates the error for all observations by aggregating the loss values.

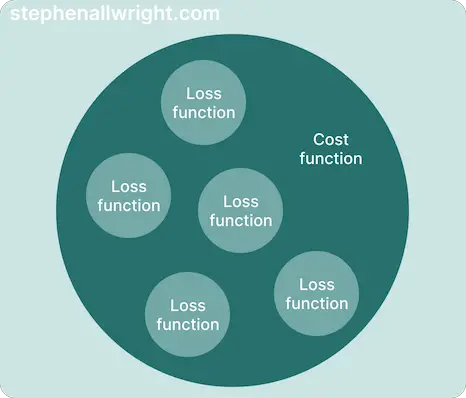

Essentially the cost function is a result of all the loss functions. A helpful way to visualise this would be as follows:
