Accuracy vs balanced accuracy, which is the best metric?

Accuracy and balanced accuracy are metrics for classification machine learning models. These similarly named metrics are often discussed in the same context, so it can be confusing to know which to use for your project. In this post I will explain what they are, their similarities and differences, and which you should use for your project.

What are accuracy and balanced accuracy?

Accuracy and balanced accuracy are metrics which measure a classification model’s ability to predict the correct classes. The values they produce are referred to as the accuracy score and the balanced accuracy score, respectively.

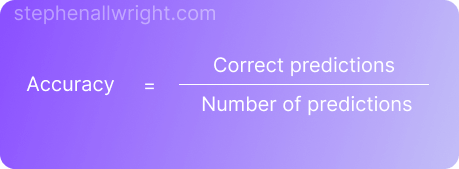

What does accuracy score mean?

Accuracy score is one of the simplest metrics available to us for classification models. It is the number of correct predictions as a percentage of the number of observations in the dataset. The score ranges from 0% to 100%, where 100% is a perfect score and 0% is the worst.

The formula for calculating accuracy score is:

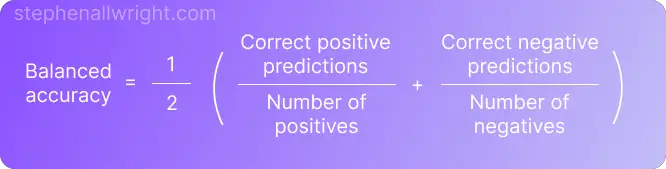
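The same formula can be sketched in a few lines of plain Python. This is an illustrative helper rather than library code: the function name `accuracy` and the example labels are hypothetical, and in practice you would use scikit-learn's `accuracy_score`.

```python
def accuracy(y_true, y_pred):
    """Hypothetical helper: share of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    # Count the observations where the prediction equals the actual class
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Four of these five predictions are correct, so accuracy is 4 / 5
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 0, 0]
print(f"{accuracy(y_true, y_pred):.0%}")  # 80%
```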

What does balanced accuracy score mean?

Balanced accuracy score is a further development of the standard accuracy metric, adjusted to perform better on imbalanced datasets. It does this by calculating the accuracy within each class separately (that is, the recall of each class) and then taking the unweighted average of these per-class scores, instead of pooling all the observations together as standard accuracy does. The score ranges from 0% to 100%, where 100% is a perfect score and 0% is the worst.

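For a two-class model, balanced accuracy is the average of sensitivity (the recall of the positive class) and specificity (the recall of the negative class):

Balanced accuracy = (Sensitivity + Specificity) / 2 = (TP / (TP + FN) + TN / (TN + FP)) / 2

where TP, TN, FP and FN are the numbers of true positives, true negatives, false positives and false negatives.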
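As a minimal sketch, assuming the labels are given as two equal-length Python lists, balanced accuracy can be computed by grouping observations by their true class, taking the accuracy within each group, and averaging those values. The helper `balanced_accuracy` and the example labels are hypothetical. For two classes the result should match the mean of sensitivity and specificity, which the example checks by hand.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Hypothetical helper: unweighted mean of per-class recall."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    totals = defaultdict(int)  # number of observations in each true class
    hits = defaultdict(int)    # number of correct predictions in each true class
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        if t == p:
            hits[t] += 1
    # Accuracy within each class that appears in y_true (i.e. its recall)
    recalls = [hits[c] / totals[c] for c in totals]
    return sum(recalls) / len(recalls)


# Two-class example: 4 positives (1) and 2 negatives (0)
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1]

# Same value via the two-class formula (TP/(TP+FN) + TN/(TN+FP)) / 2
sensitivity = 3 / (3 + 1)  # TP = 3, FN = 1
specificity = 1 / (1 + 1)  # TN = 1, FP = 1
print(balanced_accuracy(y_true, y_pred))  # 0.625
print((sensitivity + specificity) / 2)    # 0.625
```

Because the helper loops over whatever classes appear in `y_true`, the same code also works for more than two classes.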

Similarities and differences of accuracy and balanced accuracy

Given that accuracy and balanced accuracy are derived from a similar concept, there are some obvious similarities. However, there are some key differences that you should be aware of when choosing between them.

Similarities between accuracy and balanced accuracy

- Both are metrics for classification models

- Both are easily implemented using the scikit-learn package

Differences between accuracy and balanced accuracy

- Balanced accuracy works well with imbalanced datasets whilst accuracy performs poorly in these situations, often leading to misleading results

- Balanced accuracy takes into account the model’s recall ability across all classes, whilst accuracy does not and is much more simplistic

- Accuracy is widely understood by end users whilst balanced accuracy often requires some explanation
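The point about recall can be checked directly: balanced accuracy is the average of the recall obtained on each class, so it should equal scikit-learn's macro-averaged recall. The snippet below is a small sketch with made-up labels comparing `balanced_accuracy_score` with `recall_score(average="macro")`. The two agree for labels like these, where every predicted class also appears in the true labels.

```python
from sklearn.metrics import balanced_accuracy_score, recall_score

# Made-up labels: class 1 is the majority class
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 1, 0, 0, 1]

# average=None returns the recall of each class (class 0 first, then class 1)
per_class_recall = recall_score(y_true, y_pred, average=None)
macro_recall = recall_score(y_true, y_pred, average="macro")
balanced = balanced_accuracy_score(y_true, y_pred)

print(per_class_recall)
print(macro_recall, balanced)
```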

Accuracy vs balanced accuracy on imbalanced datasets

The key difference between these metrics is their behaviour on imbalanced datasets, which the following worked example shows very clearly.

Behaviour on a balanced dataset

| Prediction | Actual |
|---|---|
| 1 | 0 |
| 1 | 1 |
| 0 | 0 |
| 0 | 1 |
| 1 | 1 |
| 0 | 1 |
| 1 | 0 |
| 0 | 0 |

Accuracy = 50%

Balanced accuracy = 50%

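In this perfectly balanced dataset the metrics are the same. Both communicate the model’s genuine performance, which is that it predicts 50% of the observations in each class correctly. In fact, whenever every class has the same number of observations, the two metrics are always identical, because averaging the per-class accuracies then gives the same result as pooling all the predictions together.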
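The two metrics always coincide when every class has the same number of observations: with n observations per class and c0 and c1 correct predictions in each, accuracy is (c0 + c1) / 2n and balanced accuracy is (c0/n + c1/n) / 2, which is the same number. The sketch below checks this numerically using hypothetical pure-Python helpers (not scikit-learn) and random predictions from an imaginary model on a dataset with 50 observations per class.

```python
import random


def accuracy(y_true, y_pred):
    # Share of all observations predicted correctly
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    # Mean of the accuracy within each true class
    recalls = []
    for c in sorted(set(y_true)):
        pairs = [(t, p) for t, p in zip(y_true, y_pred) if t == c]
        recalls.append(sum(t == p for t, p in pairs) / len(pairs))
    return sum(recalls) / len(recalls)


random.seed(0)
# A balanced dataset: 50 observations of class 0 and 50 of class 1
y_true = [0] * 50 + [1] * 50
for _ in range(1000):
    # Random predictions standing in for a hypothetical model
    y_pred = [random.randint(0, 1) for _ in y_true]
    assert abs(accuracy(y_true, y_pred) - balanced_accuracy(y_true, y_pred)) < 1e-12
print("accuracy matched balanced accuracy on every trial")
```

The equality breaks as soon as the class sizes differ, which is exactly the situation the next example explores.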

Behaviour on an imbalanced dataset

| Prediction | Actual |
|---|---|
| 1 | 0 |
| 1 | 1 |
| 1 | 1 |
| 0 | 1 |
| 1 | 1 |
| 1 | 1 |
| 0 | 1 |
| 1 | 1 |

Accuracy = 62.5%

Balanced accuracy = 35.7%

In this very imbalanced dataset there is a significant difference between the metrics. Accuracy suggests that the model performs quite well, whilst balanced accuracy tells us the opposite. So which is correct? Both are correct according to their definitions, but if we want a metric that communicates how well a model is objectively performing, balanced accuracy is the one doing this for us.

The predictions table shows that the model identifies the positive cases fairly well (5 of 7, or 71.4%) but completely misses the single negative case (0 of 1). This is objectively poor performance from a model which needs to classify both classes accurately, and balanced accuracy reflects it: (71.4% + 0%) / 2 = 35.7%. Therefore, we would want to track balanced accuracy in this case to get a true understanding of model performance.
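The numbers in the imbalanced example can be reproduced with scikit-learn. This sketch copies the Prediction and Actual columns from the imbalanced table above into two lists, and also prints the confusion matrix, whose row-wise ratios give the per-class recall that balanced accuracy averages.

```python
import numpy as np
from sklearn.metrics import accuracy_score, balanced_accuracy_score, confusion_matrix

# The imbalanced worked example: seven actual positives (1) and one actual negative (0)
y_pred = [1, 1, 1, 0, 1, 1, 0, 1]
y_true = [0, 1, 1, 1, 1, 1, 1, 1]

# Rows are the actual class, columns are the predicted class
cm = confusion_matrix(y_true, y_pred)
# Correct predictions divided by the size of each actual class
per_class_recall = np.diag(cm) / cm.sum(axis=1)

acc = accuracy_score(y_true, y_pred)
bal_acc = balanced_accuracy_score(y_true, y_pred)

print(cm)
print(per_class_recall)  # recall for class 0, then class 1
print(f"Accuracy = {acc:.1%}, balanced accuracy = {bal_acc:.1%}")
# Accuracy = 62.5%, balanced accuracy = 35.7%
```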

This example shows the trap that you can fall into by following accuracy as your main metric, and the benefit of using a metric which works well for imbalanced datasets.

How to implement accuracy and balanced accuracy in Python

Accuracy and balanced accuracy are both simple to implement in Python, but first let’s look at how using these metrics would fit into a typical development workflow:

- Create a prepared dataset

- Separate the dataset into training and testing

- Choose your model and run hyper-parameter tuning on the training dataset

- Run cross validation with your model on the training dataset using accuracy or balanced accuracy as metrics

- Train your final model on the full training dataset

- Test your final model on the test dataset using accuracy or balanced accuracy as metrics
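The workflow above can be sketched end to end with scikit-learn. This is an illustrative outline under several assumptions that are not in the original: a synthetic imbalanced dataset from `make_classification`, a logistic regression model, a small hypothetical grid over its `C` parameter, and a stratified 80/20 train/test split. Any classifier and tuning strategy could be swapped in.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, balanced_accuracy_score
from sklearn.model_selection import GridSearchCV, cross_val_score, train_test_split

# 1. A prepared dataset (assumption: synthetic, roughly 90% class 0 and 10% class 1)
X, y = make_classification(n_samples=1000, weights=[0.9, 0.1], random_state=42)

# 2. Separate into training and testing sets, keeping class proportions via stratify
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# 3. Hyper-parameter tuning on the training set, scored by balanced accuracy
search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.01, 0.1, 1, 10]},  # hypothetical grid
    scoring="balanced_accuracy",
    cv=5,
)
search.fit(X_train, y_train)
model = search.best_estimator_

# 4. Cross-validation on the training set, reporting both metrics
for metric in ("accuracy", "balanced_accuracy"):
    scores = cross_val_score(model, X_train, y_train, cv=5, scoring=metric)
    print(f"CV {metric}: {scores.mean():.3f}")

# 5. Train the final model on the full training set
model.fit(X_train, y_train)

# 6. Test the final model on the held-out test set
y_pred = model.predict(X_test)
print(f"Test accuracy: {accuracy_score(y_test, y_pred):.3f}")
print(f"Test balanced accuracy: {balanced_accuracy_score(y_test, y_pred):.3f}")
```

Passing `scoring="balanced_accuracy"` to `GridSearchCV` means the hyper-parameters are chosen by balanced accuracy rather than plain accuracy, which matters on an imbalanced dataset like this one. Since `GridSearchCV` refits the best model on the whole training set by default, the explicit `fit` in step 5 is redundant here and is kept only to mirror the list.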

We can see that we would use our metric of choice in two places: first during the cross-validation phase, and second at the end, when we want to test our final model.

I will show a much simpler example than the full workflow shown above, which just illustrates how to call the required functions:

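```python
from sklearn.metrics import balanced_accuracy_score, accuracy_score

# True class labels and the model's predicted labels
y_true = [1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1]

# Both functions take the true labels first, then the predictions
balanced_accuracy = balanced_accuracy_score(y_true, y_pred)
accuracy = accuracy_score(y_true, y_pred)

print(accuracy)           # 0.4
print(balanced_accuracy)  # approximately 0.417
```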

Accuracy vs balanced accuracy, which is the best metric?

I would recommend using balanced accuracy over accuracy, as it performs similarly to accuracy on balanced datasets (on perfectly balanced datasets the two are identical) but still reflects true model performance on imbalanced datasets, something that accuracy is very poor at. If you have to use accuracy for reporting purposes, then I would recommend tracking other metrics alongside it, such as balanced accuracy, F1, or AUC.

References

Accuracy sklearn documentation

Balanced accuracy sklearn documentation