F1 score vs accuracy, which is the best classification metric?

F1 score and accuracy are machine learning metrics for classification models, but which should you use for your project? In this post I will explain what they are, their differences, and help you decide which is the best choice for you.

F1 score and accuracy are machine learning metrics for classification models, but which should you use for your project? In this post I will explain what they are, their differences, and help you decide which is the best choice for you.

Is F1 score the same as accuracy?

F1 score and accuracy are often discussed in similar contexts and have the same end goal, but they are not the same and have very different approaches to measuring model performance.

What is F1 score?

F1 score (also known as F-measure, or balanced F-score) is an error metric whose score ranges from 0 to 1, where 0 is the worst and 1 is the best possible score.

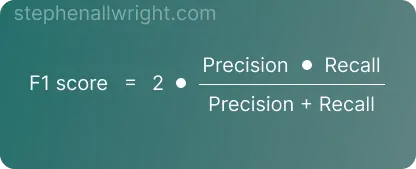

It is a popular metric to use for classification models as it provides robust results for imbalanced datasets and evaluates both the recall and precision ability of the model. The reason F1 is able to evaluate a model's precision and recall ability is due to the way it is derived, which is as follows:

Because of this calculation, F1 score is sometimes referred to as the harmonic mean of precision and recall.

What is accuracy?

Accuracy is a simple and widely understood error metric whose score ranges from 0% to 100%, where 100% is a perfect score and 0% is the worst.

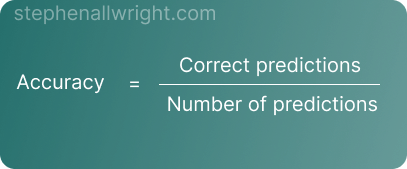

It is calculated as the number of correct predictions as a percentage of the number of observations in the dataset. This simplicity creates problems when the classes are imbalanced as a model which only predicts for the majority class will seem to be performing well according to it’s accuracy score.

The formula for accuracy is:

What is the difference between F1 score and accuracy?

The key differences between F1 score and accuracy are their performance on imbalanced datasets and the ease of communicating results to end users.

Let’s look at each of these further:

Difference between F1 score and accuracy on imbalanced datasets

The most important difference to be aware of is their behaviour on imbalanced datasets.

Accuracy does not perform well on imbalanced datasets which often leads to misleading results, whilst F1 is still able to measure performance objectively when the class balance is skewed.

F1 score vs accuracy, which is easiest to understand?

F1 score and accuracy both measure model performance, but their calculations differ in complexity which can make communicating results to end users challenging.

F1 score’s definition can be complicated for end users to understand, and would require an explanation around the difference between precision and recall. On the other hand, accuracy is universally understood which makes it much easier to use when communicating model results.

When is F1 score better than accuracy?

Now that we have looked at their key differences, how does this impact when you should use one or the other?

When should you use F1 score?

F1 score should be used when you have an imbalanced dataset or when communicating results to end users is not a key factor of the project

When should you use accuracy?

Accuracy should be used when the dataset is balanced or when communicating the results to end users is important

Using F1 score and accuracy in Python

These metrics are easy to implement in Python using the scikit-learn package. Let’s look at a simple example of the two in action:

from sklearn.metrics import f1_score, accuracy_score

y_true = [0, 1, 0, 0, 1, 1]

y_pred = [0, 0, 1, 0, 0, 1]

f1 = f1_score(y_true, y_pred)

accuracy = accuracy_score(y_true, y_pred)

Can F1 score and accuracy be the same?

F1 score and accuracy can produce the same number, especially when the dataset is balanced, but it’s important to remember that the numbers relate to different aspects of performance and are not the same.

As an example, say F1 and accuracy produce a score of 0.7.

For accuracy this means that 70% of the predictions were correct, but for F1 score it means that the harmonic mean of precision and recall is 0.7. They both indicate that model performance is good, but they are not the same.

Can F1 score be higher or lower than accuracy?

F1 score and accuracy can produce drastically different numbers, especially when the dataset is imbalanced.

In situations where the dataset classes are imbalanced, accuracy can produce results which do not accurately reflect the performance of the model, leading often to either very high or low scores. In these same situations, F1 is often still able to produce results which reflect true performance and so this can often lead to a large disparity between the two values

Is F1 score better than accuracy?

Whilst both accuracy and F1 score are helpful metrics to track when developing a model, the go to metric for classification models is still F1 score. This is due to it’s ability to provide reliable results for a wide range of datasets, whether imbalanced or not. Accuracy on the other hand struggles to perform well outside of well balanced datasets and is often just used for communication purposes.

Related articles

Classification metrics

AUC score

Balanced accuracy

How to interpret F1 score

Classification metrics for imbalanced data

Classification metrics comparisons

AUC vs accuracy

Accuracy vs balanced accuracy

F1 score vs AUC

Micro vs Macro F1 score

Metric calculators

Accuracy calculator

F1 score calculator