Micro vs Macro F1 score, what’s the difference?

Micro average and macro average are aggregation methods for F1 score, a metric which is used to measure the performance of classification machine learning models. They are often shown together, which can make it confusing to know what the difference is.

Micro average and macro average are aggregation methods for F1 score, a metric which is used to measure the performance of classification machine learning models. They are often shown together, which can make it confusing to know what the difference is. In this post I will explain what they are, their differences, and which you should use.

What are Micro and Macro F1 score?

F1 score is a metric which is calculated per class, which means that if you want to calculate the overall F1 score for a dataset with more than one class you will need to aggregate in some way. Micro and macro F1 score are two ways of doing this aggregation.

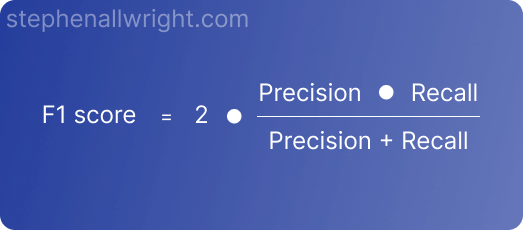

To lay the groundwork for our exploration, let’s clarify the definition of F1 score which in it’s most common form is:

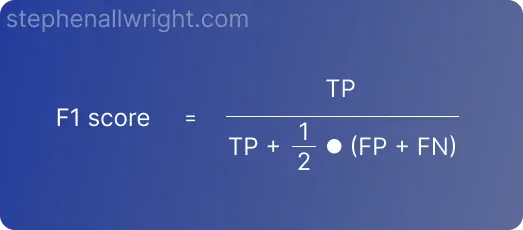

It can also be defined in terms of the raw True Positive (TP), False Positive (FP), and False Negative (FN) values, in which case it looks like this:

What is Macro F1 score?

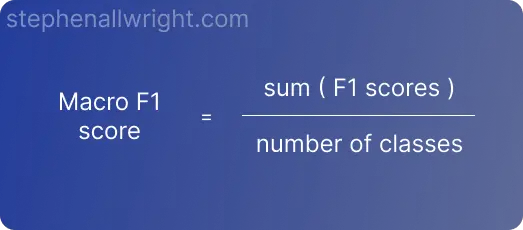

Macro F1 score is the unweighted mean of the F1 scores calculated per class. It is the simplest aggregation for F1 score.

The formula for macro F1 score is therefore:

Example of calculating Macro F1 score

Let’s look at an example of using macro F1 score.

Consider the following prediction results for a multi class problem:

| Class | TP | FP | FN | F1 score |

|---|---|---|---|---|

| 0 | 10 | 2 | 3 | 0.8 |

| 1 | 20 | 10 | 12 | 0.6 |

| 2 | 5 | 1 | 1 | 0.8 |

| Sum | 35 | 13 | 16 |

You can see that the normal F1 score has been calculated for each class. To return the macro F1 score all we need to do is calculate the mean of the three class F1 scores, which is:

Macro F1 score = (0.8+0.6+0.8)/3 = 0.73

What is Micro F1 score?

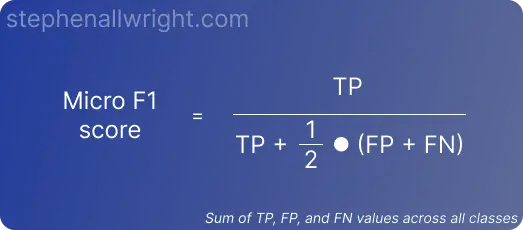

Micro F1 score is the normal F1 formula but calculated using the total number of True Positives (TP), False Positives (FP) and False Negatives (FN), instead of individually for each class.

The formula for micro F1 score is therefore:

Example of calculating Micro F1 score

Let’s look at an example of using micro F1 score.

Consider the same prediction results that we used for the previous example:

| Class | TP | FP | FN | F1 score |

|---|---|---|---|---|

| 0 | 10 | 2 | 3 | 0.8 |

| 1 | 20 | 10 | 12 | 0.6 |

| 2 | 5 | 1 | 1 | 0.8 |

| Sum | 35 | 13 | 16 |

The sum of TP, FP, and FN have been calculated. We can use these values to calculate the micro F1 score by plugging them into the formula we defined earlier:

Micro F1 score = 35 / (35 + 0.5 * (13 + 16)) = 0.71

Is Micro or Macro F1 score better for imbalanced datasets?

Micro average F1 score performs worse on imbalanced datasets than macro average F1 score. The reason for this is because micro F1 gives equal importance to each observation, whilst macro F1 gives each class equal importance.

When micro F1 score gives equal importance to each observation this means that when the classes are imbalanced, those classes with more observations will have a larger impact on the final score. Resulting in a final score which hides the performance of the minority classes and amplifies the majority.

On the other hand, macro F1 score gives equal importance to each class. This means that a majority class will contribute equally along with the minority, allowing macro f1 to still return objective results on imbalanced datasets.

Why is there no Micro average in the scikit-learn classification report?

When working with classification models it is common to create a classification report using scikit-learn which returns an overview of the predictions and key metrics. But in some cases micro average does not appear here and is instead labelled as accuracy .

Why is this?

Micro average F1 score is shown as accuracy in the classification report when the goal is single label classification. It does this because in this case the micro average F1 score returns the same value as accuracy.

The reason for this is that for single label classification, micro F1 score is calculating the ratio between correct predictions and number of predictions, which is essentially the same as the calculation for accuracy.

What is the difference between Micro and Macro F1 score?

The key difference between micro and macro F1 score is their behaviour on imbalanced datasets. Micro F1 score often doesn’t return an objective measure of model performance when the classes are imbalanced, whilst macro F1 score is able to do so.

Another difference between the two metrics is interpretation.

Given that micro average F1 score is essentially the same as accuracy for single label problems, it will be easier to communicate the results with end users as accuracy is a universally understood calculation. Macro F1 score on the other hand will be more difficult to communicate as it’s not as widely known.

Should I use Micro or Macro F1 score?

If you have an imbalanced dataset then you should use macro F1 score as this will still reflect true model performance even when the classes are skewed. However, if you have a balanced dataset then micro F1 score could be considered, especially if communicating the results with end users is important.

Related articles

Classification metrics

ROC AUC score

Accuracy score

Balanced accuracy

F1 score calculator

How to interpret F1 score meaning

Classification metric comparisons

AUC vs accuracy

Accuracy vs balanced accuracy

F1 score vs AUC

F1 score vs accuracy